Navigating the Ethics of AI Language Models

Artificial Intelligence (AI) has advanced significantly in recent years, particularly in the field of natural language processing (NLP), leading to the development of sophisticated text generators like ChatGPT. While the potential for such technology to benefit society in various ways is enormous, there are also concerns about its uncertain future and potential negative impacts on industries, individuals, and society as a whole.

These AIs have been around the internet for a very long time. Textsynth, Inferkit, Talk to Transformer – this is an idea that programmers and the internet at large have been interested in for years. But all the previous examples have been largely toys. They’re fun to play around with, and that’s because they aren’t exactly good at their job. But this has changed: OpenAI’s language model, ChatGPT, pushes the realm of AI to the next level. It is no longer a toy – AI content generation might just be the future. And what that future looks like could change everything.

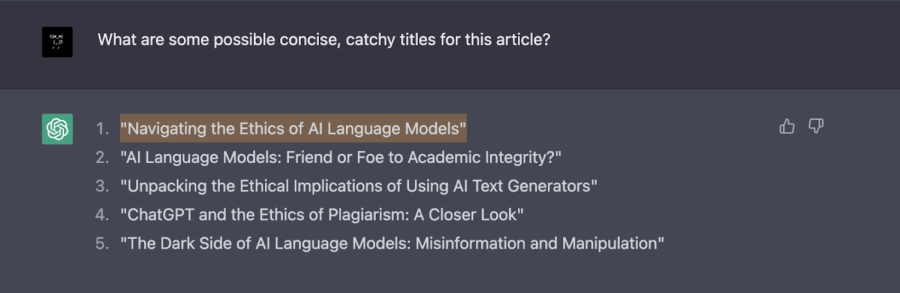

If you’re understandably skeptical about this, take another look at the first paragraph of this article. Though not exactly outstanding, it seems reasonable, does a pretty good job introducing the concept of AI to the article, and, most importantly, you thought I wrote it. That would be incorrect. That introduction was what ChatGPT responded with when I asked it to write an article about the future of AI text generators such as itself. What I haven’t shared is the entire rest of the article, a 500-word opinion piece that goes over numerous points about AI and its role in society. If that unsettles you, it should. It almost makes me wonder: If an AI can write the article I’m writing in seconds, why should I write it at all?

This raises the problem of academic plagiarism using AI.

Step 1: Open chat.openai.com in any browser, and create a free account if you haven’t already.

Step 2: Open the assignment in question and see what it’s asking for. It’s preferable if it’s purely written, such as an essay.

Step 3: Tell ChatGPT the parameters of your assignment, and ask it to complete it. The more specific, the better, but it will always try its best to work with what you’ve got.

Step 4: Ctrl+C, Ctrl+V.

This process can take less than a minute.

On a societal and cultural scale, you’ll find very few people who think this is a good thing – the problems aren’t difficult to figure out. But on an individual scale, to the student who procrastinated on an assignment until the last minute or would rather hang out with friends, the temptation of an unlimited free assignment-completer is extremely strong. It’s almost difficult to blame them – in many cases, it is simply the most efficient way to complete the task. But any teacher will vouch that efficiency is not the goal; rather, students should want the experience. Once again, though, very few will disagree – it’s the pure temptation of instant completion of an assignment that draws students, not any kind of moral or long-term reasoning.

A site called GPTZero has risen in popularity since the release of ChatGPT. Its slogan is “Humans Deserve the Truth,” and the site has a very simple function: When supplied with text, it scans it and reports how likely it is that the text was written by an AI. Like ChatGPT, it is free and only requires an account.

Plugging in the first paragraph of this article returns, “Your text is likely to be written entirely by AI.” Plugging in the second paragraph returns, “Your text is likely to be written entirely by a human.” This is something very important for any teacher to know if they suspect AI plagiarism. But of course, it’s not that simple – the main problem is that if a student wants to use ChatGPT, they could in theory have it write their assignment, but then paraphrase the entire thing. In that case, it’s extremely difficult to track down. Going further, what if a student, with full intention of being academically honest, simply asks ChatGPT for help as if it were a person? The site is entirely receptive to that kind of input too.

“I’m having trouble coming up with prominent literary devices in Hamlet, could you help me?”

“Certainly! Hamlet is a rich and complex play that contains numerous literary devices. Here are some prominent ones to consider:

1. Soliloquy: Hamlet’s numerous soliloquies throughout the play reveal his innermost thoughts and feelings, and provide insight into his character and motivations.

2. Foreshadowing: There are several instances of foreshadowing in the play, such as the appearance of the ghost of Hamlet’s father and Hamlet’s premonitions of his own death.

3. Irony: The play is full of irony, such as the fact that Hamlet’s attempts to avenge his father’s murder ultimately lead to his own downfall.”

Alright, so a student could ask ChatGPT for help, rather than for plagiarism. Is this cheating? What if I ask it to write me an outline for an essay, but write the actual essay myself? What if I ask it to write my assignment, but only to use as inspiration, or to see an example? What if I plug in my essay and ask it to give feedback? What if I ask it to make a study guide for me which I use to learn the materials? What if I ask it to find sources for my assignment rather than searching for them myself? What if I can’t understand a given text, so I give it to the AI and ask it to summarize or analyze? The lines are becoming blurred. We can all agree that copying and pasting the assignment is cheating, but if you get creative with your prompts, ChatGPT can do a lot of other things. How do those stand? When is one using it too much? There are tons of other tools that help students – Grammarly, EasyBib, NoodleTools, even just Google – so where is the line between helping and cheating?

It’s worth noting that ChatGPT isn’t perfect, though. Firstly, anything it writes, with very few exceptions, is extremely basic. The first reservation I had about including the first paragraph of this article is that it’s very generic, and not quite my writing style. As a general rule of thumb: ChatGPT can do anything, but that doesn’t mean it can do it well. One can pick up on its writing style after a while: perfectly bland and predictable, always the same ideas and logic. It sort of feels less like human writing and more like the average of all human writing, and hey, that’s actually exactly what it is. Though it is indeed scary what it can do, upon further inspection, it isn’t quite the talent-destroyer you might have heard about. But this doesn’t mean it will never get there. Maybe it will take decades, maybe it will take a couple months – never before in human history has technology progressed so rapidly that humans haven’t had a chance to catch up. For the purposes of this article, let’s assume AI will continue to advance without leveling off.

Where does that leave us? Let’s hear an expert’s thoughts: “The ethical issues related to the use of AI language models lie in the actions and decisions of the humans who use these tools, rather than the tools themselves,” says ChatGPT. “AI language models like myself are designed to generate text based on the input that we receive from users. We do not have any inherent ethical or moral values, nor do we make decisions about how our output is used.”

It can be difficult to take what an AI says about AI seriously. We feel like only we humans should be the ones having this discussion about humans, and we can’t guarantee that an AI has the same values or motives as us. In fact, ChatGPT itself often clarifies that it is incapable of having its own morals or opinions, and that we should take what it says with a grain of salt, but I think it still has a point here.

AI is more than capable of helping us in what we do; the question is how much help we want. How much do we value a human’s role in certain tasks? We’re fine with robots cleaning our floors or organizing our spreadsheets, but how far can it go before we are uncomfortable? Should AI help us communicate? Analyze? Create? Think? And perhaps a more pressing question: How valid is our comfort in this equation? Comfort is not based on truth, efficiency, or morality – it’s based on culture. And culture is wrong all the time.

In a tactical sense, we should accept AI because it is simply not going away. We humans are powerful because we make tools – this was true in the Stone Age and is still true today. A hammer is helpful because it can hit harder than a human can. It makes sense that we would seek a tool that makes tools better than we can. If the solution to a problem is a tool, then the solution to a lack of solutions is a tool that solves for tools. So regardless of the issues it causes us, it isn’t going away. It’s just the natural progression of technology that humanity is inclined towards.

In a moral sense, we have to remember that with great power comes great responsibility. It’s easy to fall into the trap of simply labeling AI as either good or bad – there is a growing social media movement against any kind of AI-created work. The movement absolutely has a basis, addressing concerns such as copyright and unemployment, but as with many things, a healthy dose of both sides is the most helpful. We have to work with AI, not against it. Going back to the hammer analogy, a hammer used carelessly or maliciously can absolutely destroy or hurt – but with a little bit of thought, it can be used to create.

The question at the end of it all is simple: Is advanced AI safe? Will it really take all of our jobs, ruin creativity, and take us over? Or will it be a massive tool that helps humanity in its endeavors? I don’t have an answer. It can be difficult to tell the difference between fear of change and actual analysis. We as a species are notoriously bad at that.

Many have pointed out that one of the largest problems with AI development is that it’s all being done by companies that seek profit. All these questions about AI ethics and safety are important, but are the people developing this technology asking them? Currently, Google is in the public experimentation phase of its own NLP system called Bard. Other large companies are also almost certainly working on their own chatbots, since improving their models will be paramount to profit.

Though it’s annoying, the truth is that there are few knowable answers – it’s all speculation and prediction. This is territory entirely foreign to humans; we truly don’t know how far AI will go and what it will do. We know that at present it may be in the process of changing a lot of what we know about work and creativity, but it’s not quite there yet. In the meantime, we have to remember to stay open-minded while also staying cautious – this may be dangerous, but it may also be the future whether we like it or not.

DJ Nguyen is a junior with an interest in language and computer science. He is in his 2nd year with The Viking Press and is a brand new editor for the...